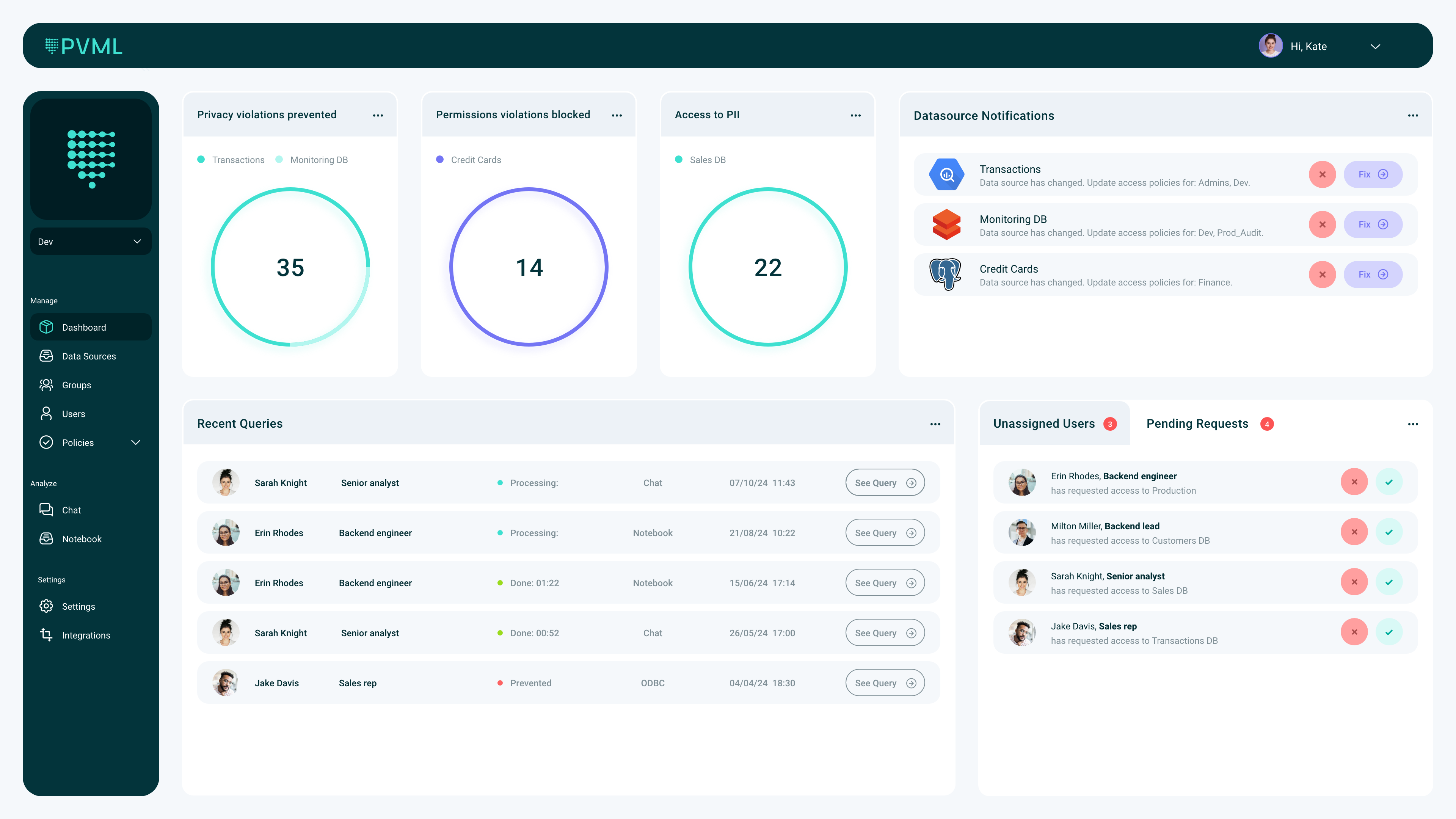

Enterprises are hoarding more data than ever to fuel their AI ambitions, but at the same time, they are also worried about who can access this data, which is often of a very private nature. PVML is offering an interesting solution by combining a ChatGPT-like tool for analyzing data with the safety guarantees of differential privacy. Using retrieval-augmented generation (RAG), PVML can access a corporation’s data without moving it, taking away another security consideration.

The Tel Aviv-based company recently announced that it has raised an $8 million seed round led by NFX, with participation from FJ Labs and Gefen Capital.

Image Credits: PVML

The company was founded by husband-and-wife team Shachar Schnapp (CEO) and Rina Galperin (CTO). Schnapp got his doctorate in computer science, specializing in differential privacy, and then worked on computer vision at General Motors, while Galperin got her master’s in computer science with a focus on AI and natural language processing and worked on machine learning projects at Microsoft.

“A lot of our experience in this domain came from our work in big corporates and large companies where we saw that things are not as efficient as we were hoping for as naive students, perhaps,” Galperin said. “The main value that we want to bring organizations as PVML is democratizing data. This can only happen if you, on one hand, protect this very sensitive data, but, on the other hand, allow easy access to it, which today is synonymous with AI. Everybody wants to analyze data using free text. It’s much easier, faster and more efficient — and our secret sauce, differential privacy, enables this integration very easily.”

Differential privacy is far from a new concept. The core idea is to ensure the privacy of individual users in large data sets and provide mathematical guarantees for that. One of the most common ways to achieve this is to add a carefully calibrated amount of random noise to the data or to query results: enough to mask any one individual's contribution, but not enough to meaningfully change the aggregate analysis.
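To make the idea concrete, here is a minimal sketch of the textbook Laplace mechanism applied to a counting query. It is not PVML's algorithm, which the company has not described in detail here; the function names, the `epsilon` value and the salary figures are all made up for illustration.

```python
import random


def laplace_noise(scale, rng):
    """Draw one sample from a Laplace(0, scale) distribution.

    The difference of two independent exponential samples with
    rate 1/scale follows a Laplace distribution with that scale.
    """
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(values, predicate, epsilon, rng):
    """Return a noisy count of the items that satisfy `predicate`.

    A counting query has sensitivity 1: adding or removing one person
    changes the true count by at most 1. Laplace noise with scale
    sensitivity / epsilon then gives epsilon-differential privacy.
    """
    true_count = sum(1 for v in values if predicate(v))
    sensitivity = 1.0
    return true_count + laplace_noise(sensitivity / epsilon, rng)


# Hypothetical data: six salaries, four of them above 60,000.
rng = random.Random(0)
salaries = [52_000, 61_000, 75_000, 88_000, 120_000, 43_000]
noisy = private_count(salaries, lambda s: s > 60_000, epsilon=0.5, rng=rng)
print(f"noisy count: {noisy:.2f}")
```

A smaller `epsilon` means more noise and stronger privacy, while a larger one means more accurate answers. Any single answer is off by a random amount, but the noise averages out to zero, which is why aggregate analysis stays useful.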

The team argues that today’s data access solutions are ineffective and create a lot of overhead. Often, for example, a lot of data has to be removed before employees can be given secure access to it. That can be counterproductive, because the redacted data may no longer be usable for some tasks, and the added lead time to get access often makes real-time use cases impossible.

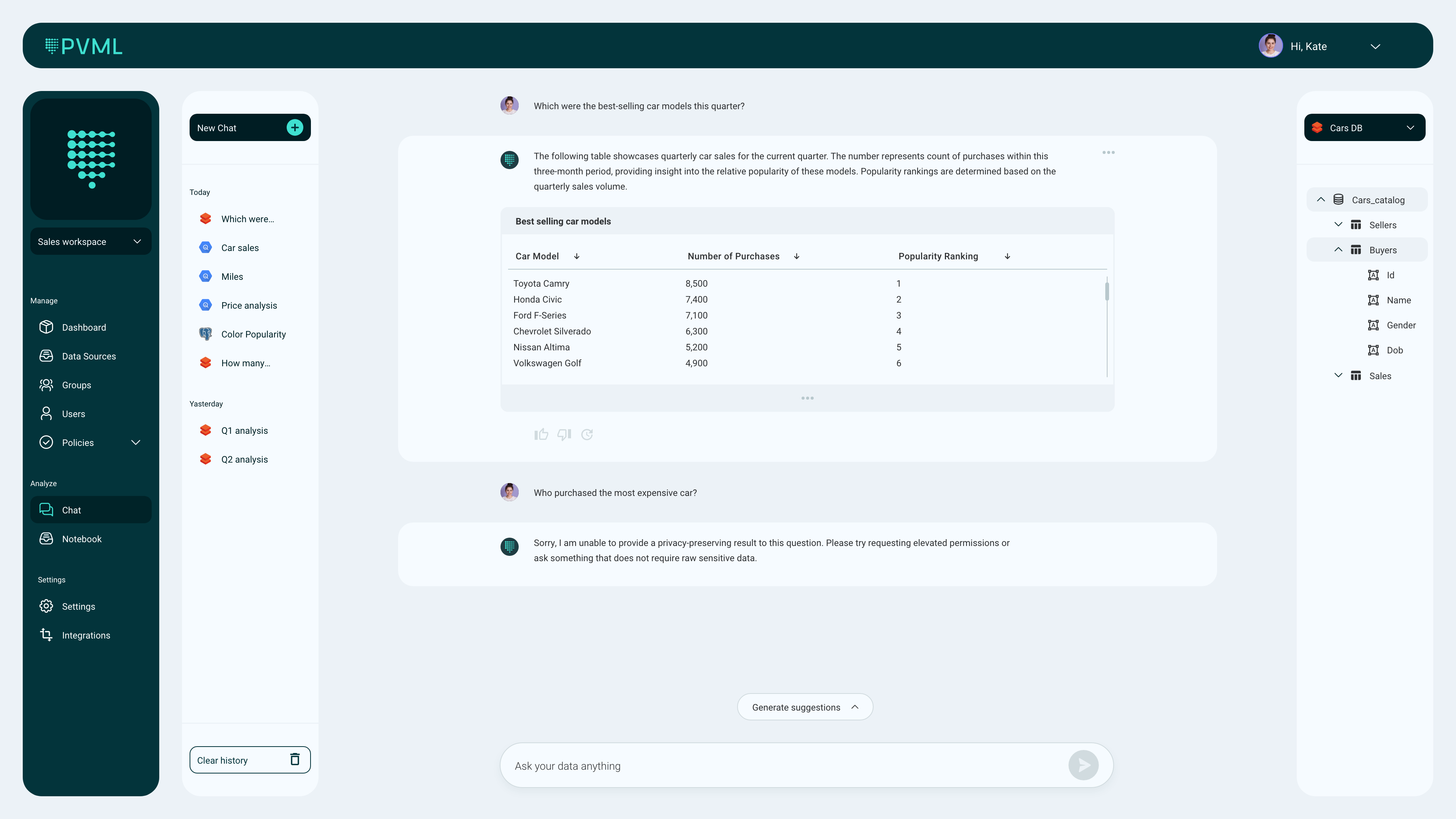

Image Credits: PVML

The promise of differential privacy is that PVML’s users don’t have to change the original data. This avoids almost all of the overhead and unlocks the information safely for AI use cases.

Virtually all the large tech companies now use differential privacy in one form or another, and make their tools and libraries available to developers. Yet the PVML team argues that most of the data community hasn’t really put it into practice.

“The current knowledge about differential privacy is more theoretical than practical,” Schnapp said. “We decided to take it from theory to practice. And that’s exactly what we’ve done: We develop practical algorithms that work best on data in real-life scenarios.”

None of the differential privacy work would matter if PVML’s actual data analysis tools and platform weren’t useful. The most obvious use case here is the ability to chat with your data, all with the guarantee that no sensitive data can leak into the chat. Using RAG, PVML can bring hallucinations down to almost zero and the overhead is minimal since the data stays in place.

But there are other use cases, too. Schnapp and Galperin noted that differential privacy also allows companies to share data between business units. It may also allow some companies to monetize their data by selling access to third parties.

“In the stock market today, 70% of transactions are made by AI,” said Gigi Levy-Weiss, NFX general partner and co-founder. “That’s a taste of things to come, and organizations who adopt AI today will be a step ahead tomorrow. But companies are afraid to connect their data to AI, because they fear the exposure — and for good reasons. PVML’s unique technology creates an invisible layer of protection and democratizes access to data, enabling monetization use cases today and paving the way for tomorrow.”

techcrunch.com