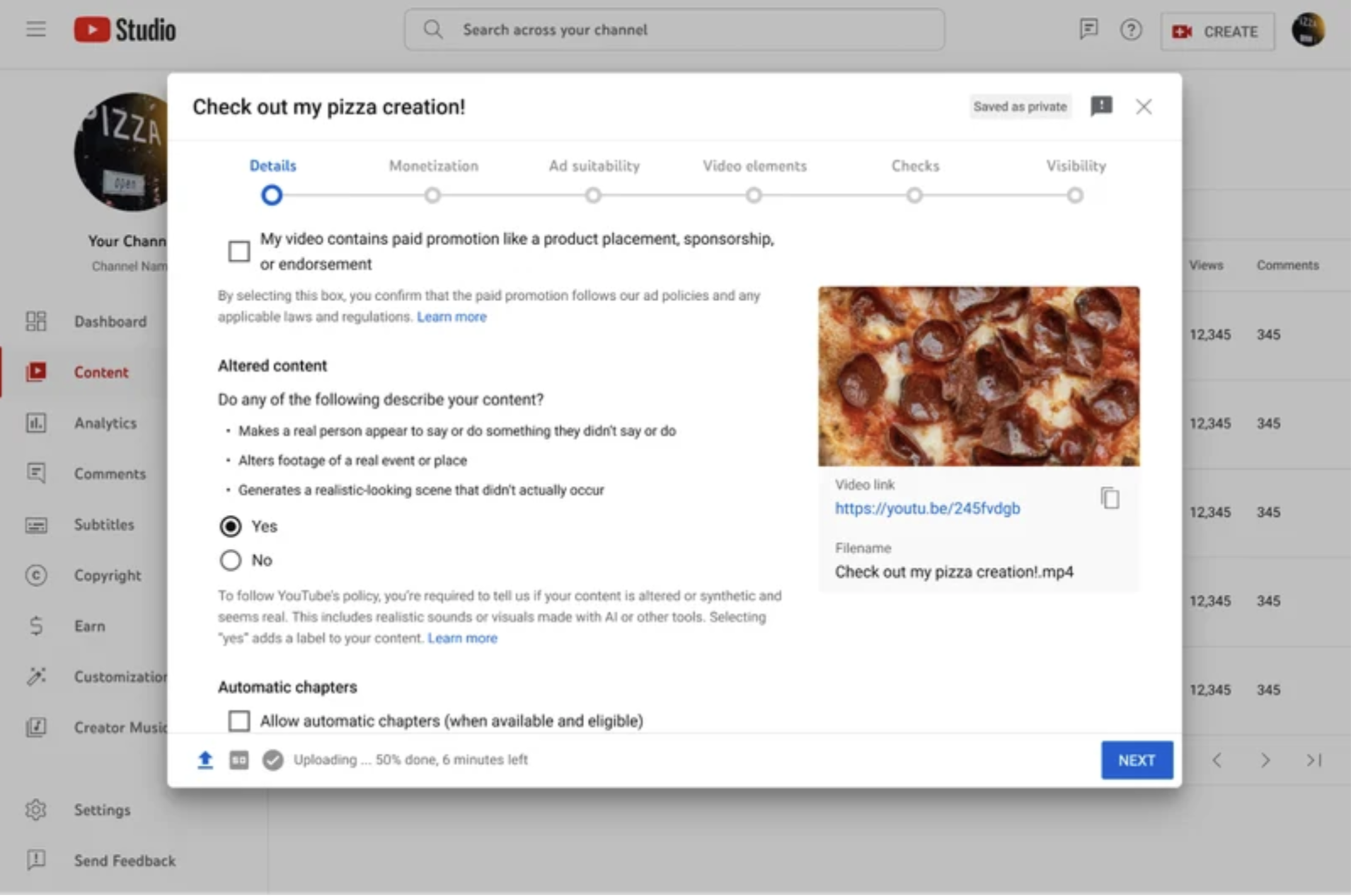

YouTube is now requiring creators to disclose to viewers when realistic content was made with AI, the company announced on Monday. The platform is introducing a new tool in Creator Studio that will require creators to disclose when content that viewers could mistake for a real person, place or event was created with altered or synthetic media, including generative AI.

The new disclosures are meant to prevent users from being duped into believing that a synthetically created video is real, as new generative AI tools are making it harder to differentiate between what’s real and what’s fake. The launch comes as experts have warned that AI and deepfakes will pose a notable risk during the upcoming U.S. presidential election.

Today’s announcement follows YouTube’s statement in November that it would roll out the update as part of a larger introduction of new AI policies.

YouTube says the new policy doesn’t require creators to disclose content that is clearly unrealistic or animated, such as someone riding a unicorn through a fantastical world. It also isn’t requiring creators to disclose content that used generative AI for production assistance, like generating scripts or automatic captions.

Image Credits: YouTube

Instead, YouTube is targeting videos that realistically depict a person’s likeness. For instance, creators will have to disclose when they have digitally altered content to “replace the face of one individual with another’s or synthetically generating a person’s voice to narrate a video,” YouTube says.

They will also have to disclose content that alters footage of real events or places, such as making it seem as though a real building caught fire. The same applies when they have generated realistic scenes of fictional major events, like a tornado moving toward a real town.

YouTube says that most videos will have a label appear in the expanded description, but for videos that touch on more sensitive topics like health or news, the company will display a more prominent label on the video itself.

Viewers will start to see the labels across all YouTube formats in the coming weeks, starting with the YouTube mobile app and followed soon by desktop and TV.

YouTube plans to consider enforcement measures for creators who consistently choose not to use the labels. The company says that it will add labels in some cases when a creator hasn’t added one themselves, especially if the content has the potential to confuse or mislead people.

techcrunch.com